Artificial Intelligence Project

Goal Game Simulation

2008/2009 - 1st semester

Developed by: João Carlos Ferreira Gonçalves

Contact: jcgonc@student.dei.uc.pt

Last update: 20 February 2009

Warning! Huge page with big images ahead! High resolution recommended (developed @ 1680x1050).

Executable: ia_project.7z

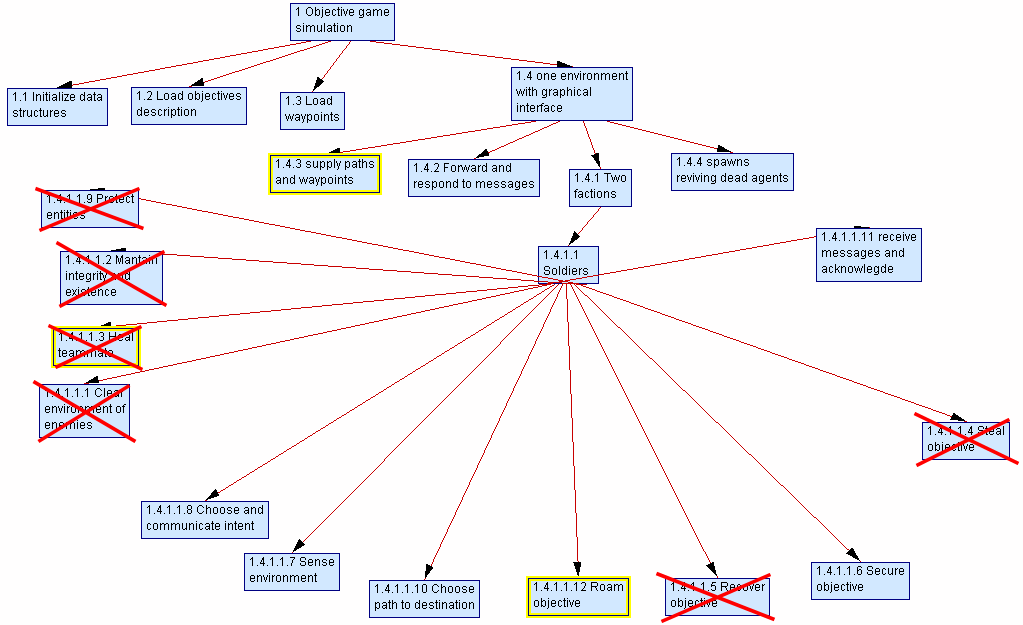

b. Use cases and sequence diagrams

e. Agent template diagram and conversations

f. System classes architecture and Ontology

This project simulates a game consisting of different goals to accomplish, played by agents of two adversary factions. The project focuses on the following details:

- Multi-Agent oriented framework;

- Communication between agents done using messages based on a common ontology;

- Agent's centre of attention are completing tasks / goals;

- Communication and negotiation of assigned tasks between the agents;

- No exploration of environment.

The two factions focus on completing certain goals, like roaming or carrying an item. Finally, the factions are composed of two kinds of agents in the following hierarchy:

-

BDI Agent Template;

-

Spawn, creates agents;

Soldier, focuses on completing goals;

-

- Environment, interacts with agents;

In the initial planning phase the project was expected to have more features and functionality, but while it was being developed some of them were considered complicated and could not been implemented on the given time. Consequently, this final version is a simplified adaptation of the initial project, so in the following diagrams those abandoned features are crossed red. In the end, most of the abandoned features are additional goals and fighting between the teams.

Resuming, fighting between the teams was removed, as well as the medic agent (specialized version of the soldier agent) and more goals.

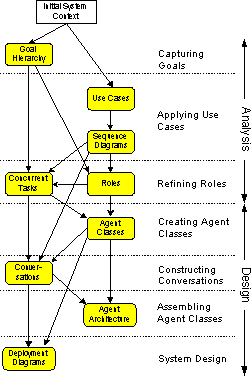

The system is developed in Java, according to a system methodology called MASE, from Multiagent Systems Engineering Methodology. This design principle is a variation of the cascade methodology used in software design, but focussed to the development of multiple agent systems. The methodology is applied in two big phases, titled Analysis and Design, which are broken in smaller steps, like in the following diagram.

The analysis and design are done with the tool 'agentTool', where the created diagrams are shown ahead in the report. As said before, the project was implemented in Java, but not using the library JADE. Instead, its required functionality was coded from the ground up. This includes onthology handling and message delivery. Because of this, the actual AI architecture is mono-threaded (excluding some functions) and does not use both socket and RMI communication. However, they can be easily added.

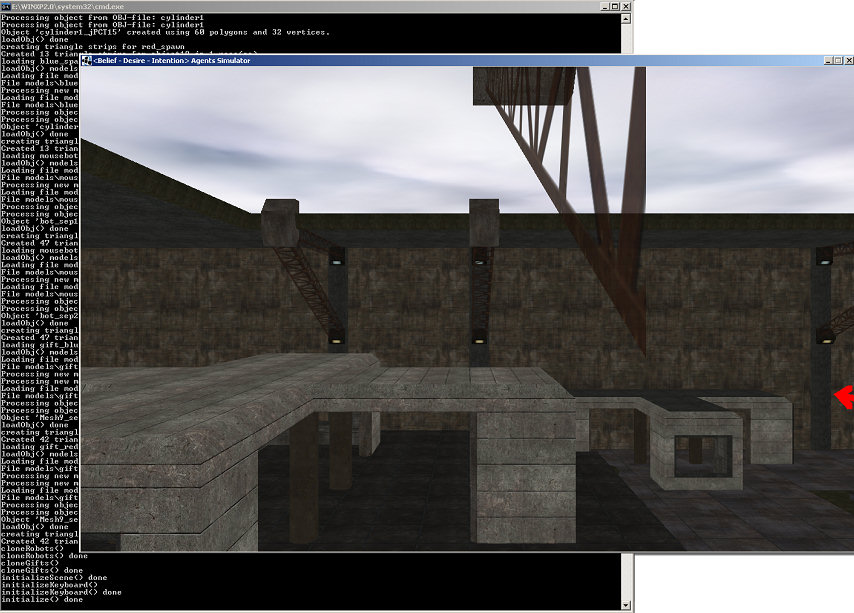

For the visuals, the project uses a simple 3D rendering / engine library called 'jPCT', hiding some details of graphics rendering and allowing the developer to easily focus on implementing the artificial intelligence logic. This API uses OpenGL on its core for hardware acceleration, giving more CPU cycles for the artificial intelligence engine.

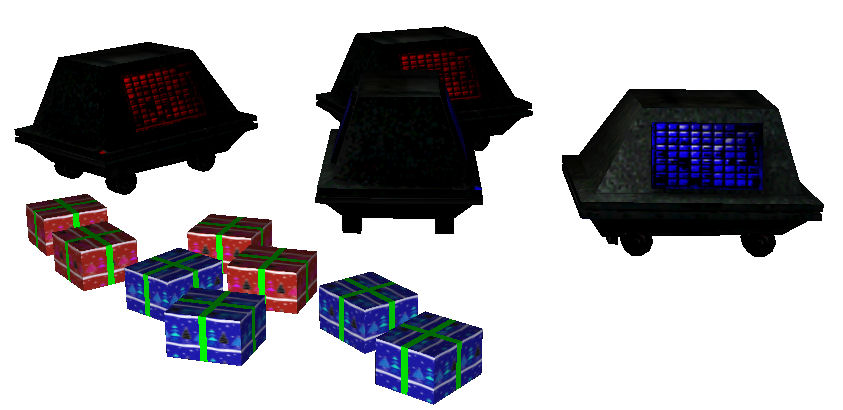

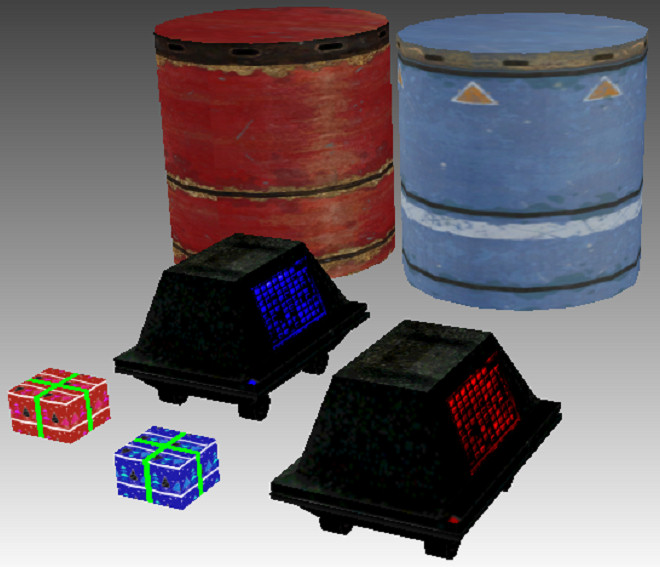

The 3D models for the agents were retrieved from Dark Forces - Jedi Knight's Enhanced (JKE), using the mouse bot's models. The models for the gifts were gotten from Google's Warehouse (after some cleaning) and the cylindrical models for the spawns, as well as the axis and the skyboxes were produced by the author of this project. The model of the map was extracted from a Wolfenstein's: ET map named 'Bzz Drunk'. The next figures show the models and the map used on this project:

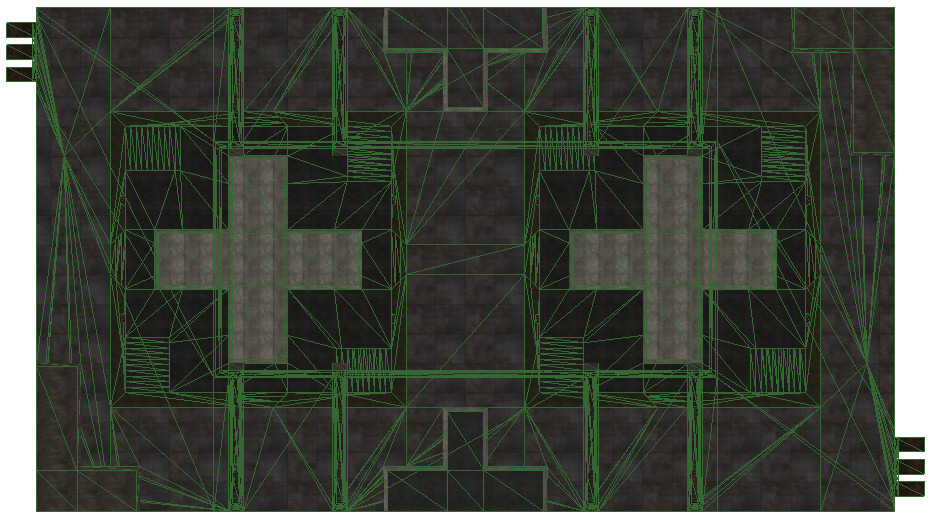

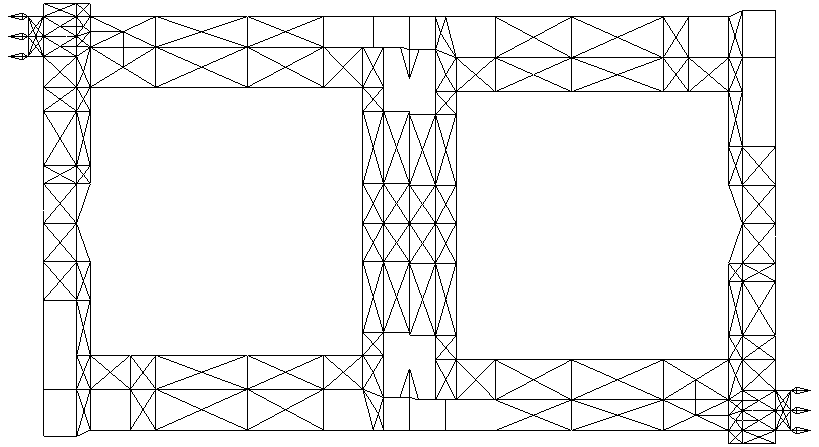

Finally, there is also another 3D model, which is not drawn on the screen. Instead it is used by the path navigation system, implemented in the agents. This file is the waypoints file and was done by the author using the map's geometry as baseline, so that the agents can travel in the scenario as real as possible. As it can be seen, the images above (map geometry) and below (waypoints) are extremely related:

The agents knowledge of the world (beliefs, entities, facts and goals) is given by 'sensing' the environment (i.e., communicating with it), as well as the communication between them (the agents). The soldier agents send messages to the environment agent asking nearby facts and their current team objectives. In this point, the communications between agents are done similarly to a radio, that is, the messages between them are delivered instantly (but through the environment).

The agents are based on a simplified form of BDI template, executing objectives in two levels. The higher level executes one "higher level" goal at a time, by their priority and 'desire' of the agent, which are the ones described ahead. The lower level implements the follow path mechanism.

There are three types of agent, extending the runtime library's (JADE) agent class: 'EnvironmentAgent', 'SoldierAgent' and 'SpawnAgent':

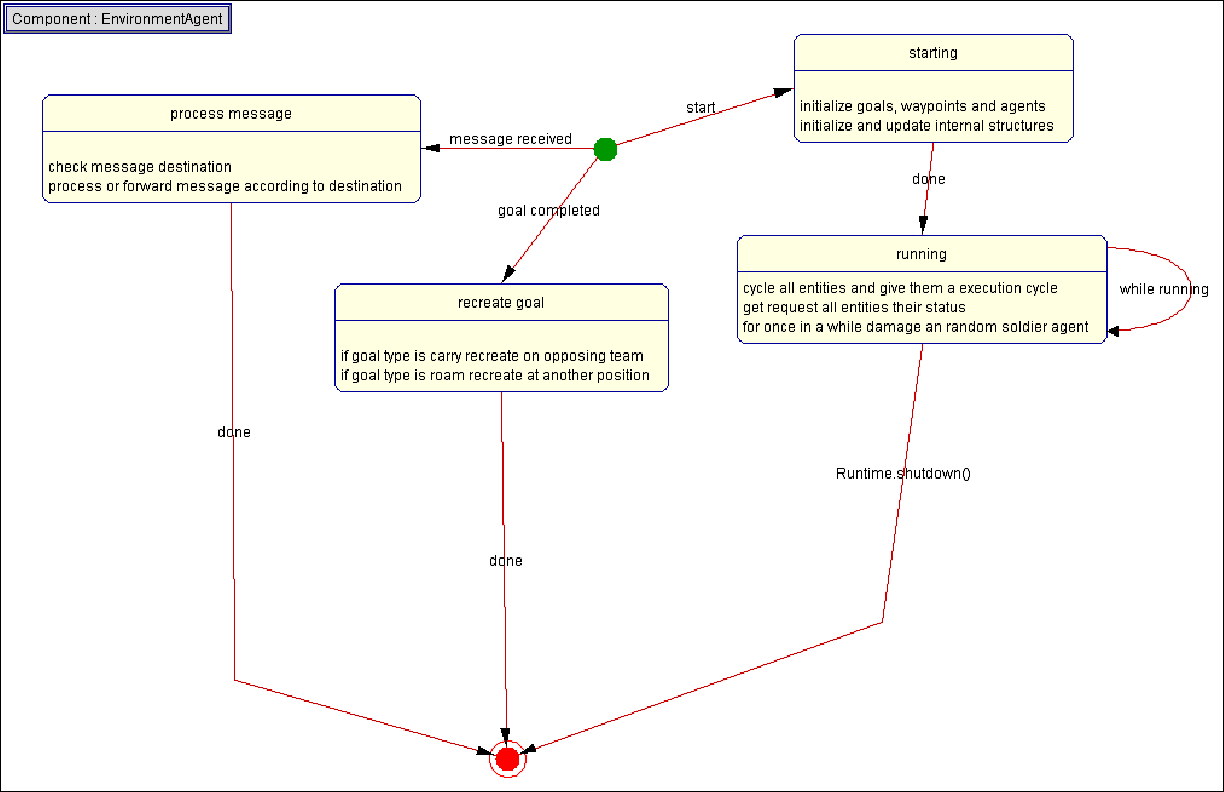

· The 'EnvironmentAgent' contains the main environment, as well as its details and characteristics, like existing entities (objects and agents), goals and messages. It also transmits messages between the other agents, known to that environment.

· Next, the spawn agents, whose task is to re-create 'dead' agents at a specific team location (also known as spawn, birth place). The agents spawn occurs at a timely fashion, specific to each faction, for example, ten in ten seconds for the 'red' team and fifteen in fifteen for the blue faction.

· Finally, the soldier agents are the 'living entities', executing tasks to complete their chosen goals. They also send messages between them to select those goals and to require facts about the environment.

Each faction has multiple goals to accomplish and currently, their completion has no specific order. Each goal is composed of a destination, if required, a single entity (corresponding to a real object or an organism, like a radio, a secret document or even an agent), and an implicit action applied to both the goal and its entity, like 'go to destination' or 'carry entity to destination'. The goals have also more properties, like the assigned agent, the corresponding team, priority, completeness, etc. On general, only one objective can be assigned to an agent, but in this project there won't be any planning of goals, so that the project can maintain an acceptable complexity. The expected goals are as follows:

3. Carry <Entity> from it's position to <Destination> (available to SoldierAgent)

4. Roam to <Destination> (available to SoldierAgent)

5. Spawn <SoldierAgent> at <Position> (available to SpawnAgent)

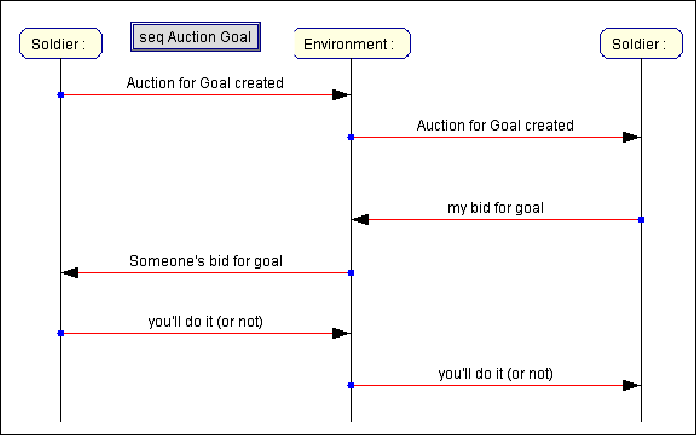

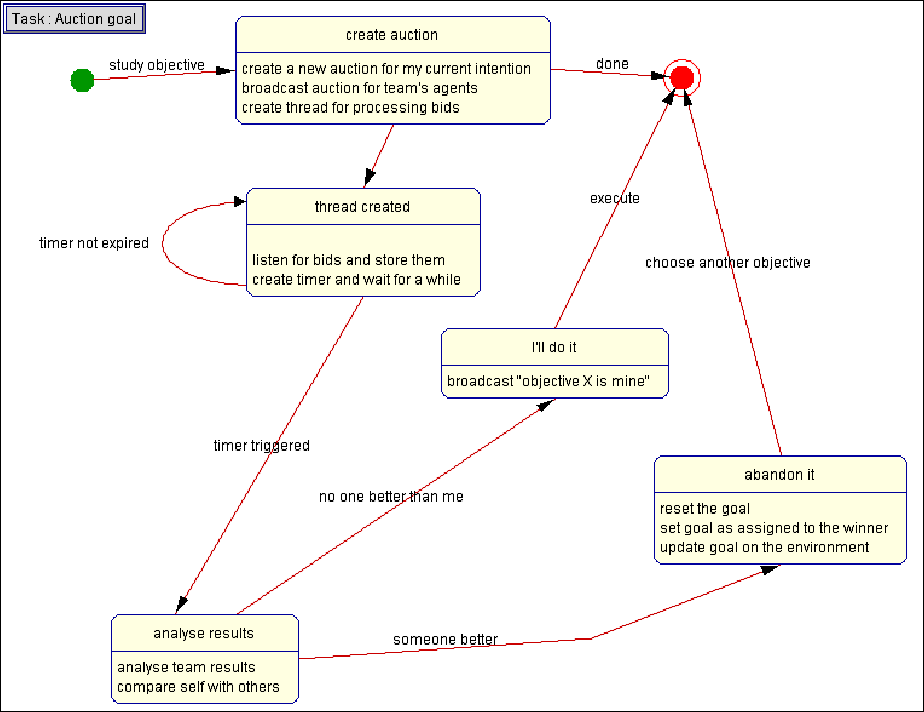

The goals when created are selected between the agents using a simple form of English auction. Basically, the agent in the best situation will get the goal (which is analysed according to distance, priority, agent availability and health), so when the goal is chosen by some agent, it will auction the acquired goal to its team and they'll exchange messages (with the bids, containing their distance to the target) to discover who gets it. In the end the auctioneer compares his distance with the others and in case there is someone nearer, it assigns his goal to the other agent.

On this final version, whenever an agent completes a goal, the environment recreates it so that there are always goals to be done. For instance, for the roam goal, when an agent completes it (in this case, arrives at the roam destination), the environment resets the goal's status and sets up another random destination point. In the case of the carry goal, when an agent finishes it, the environment changes the goal's team to the conflicting one so that the opposing team will carry back the payload to the original position. This way the agents are always travelling in the virtual world and the payloads never stay at the same place.

The traversal of the environment's paths was implemented recurring to previously encoded graphs of waypoints. There won't be any exploration and discovery of the waypoints because it will cause an unbalanced development between the factions. For an example, a faction may discover an objective but the opposite team will not be able to recover it, for the reason it doesn't know how to reach it. The waypoints are retrieved from a 3D model containing only geometry information. Basically the waypoints are got from the lines and faces stored in that file, which describe which waypoints (vertices) are connected.

In the following sections the 'MASE' planning details are specified, in the form of exposed diagrams taken from the Java application, 'agent tool'. They are shown in the planned order, as well as the same order in the application itself.

Finally, this is a specification of a planning, so it may not correspond exactly to the implemented architecture. Some details are expected to change, according to the system development.

a. Goal hierarchy

The goal hierarchy, as the name suggests represents the system goals (purpose) in a pyramidal setting. Each goal may be detailed in required sub-goals so that the system in its whole executes the required functionalities.

In our case the system is expected to simulate a game comprised of objectives. So it must initialize itself, load the objectives description from some files, as well as the waypoints (paths), show the state of the environment in a graphical window, create and maintain the agents and transmit the messages between them. The agents are divided in two factions, where each agent can have a specific role. In general, the agents will sense the surrounding environment (virtual world), choose an intent or goal from the list of available ones and travelling the internally chosen paths. Not to forget that the agents decide between themselves who will get a goal, so they will be constantly communicating, as well to request or transmit new goals.

b. Use cases and sequence diagrams

In the planning phase we lifted the following use cases:

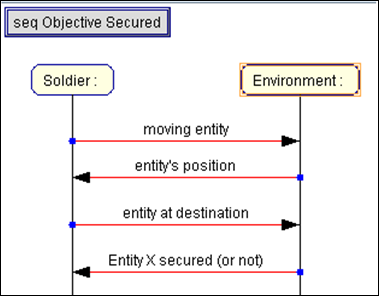

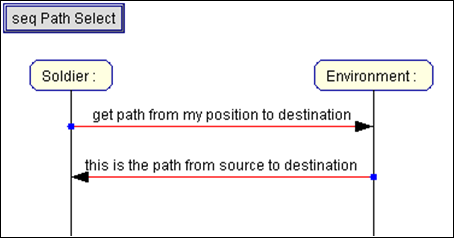

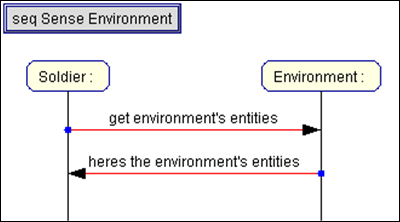

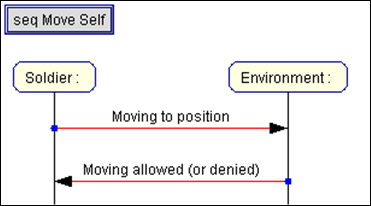

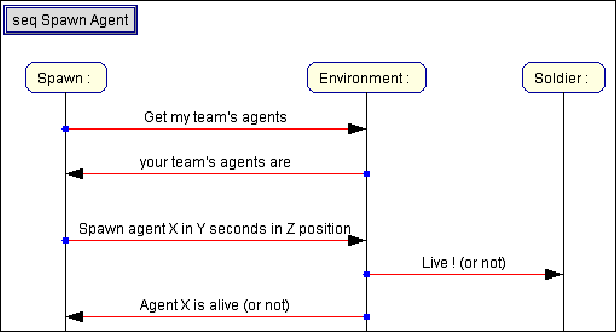

From which were done the next diagrams:

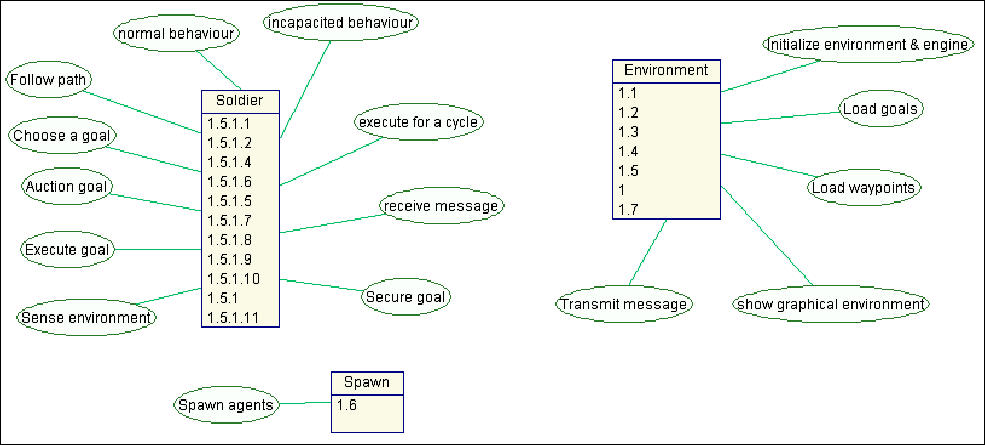

c. Role diagram

The use cases shown before are assigned to roles and associated tasks, as described in the above figure. Each of the roles will correspond to an agent class and its tasks to functions and behaviours. These are described ahead.

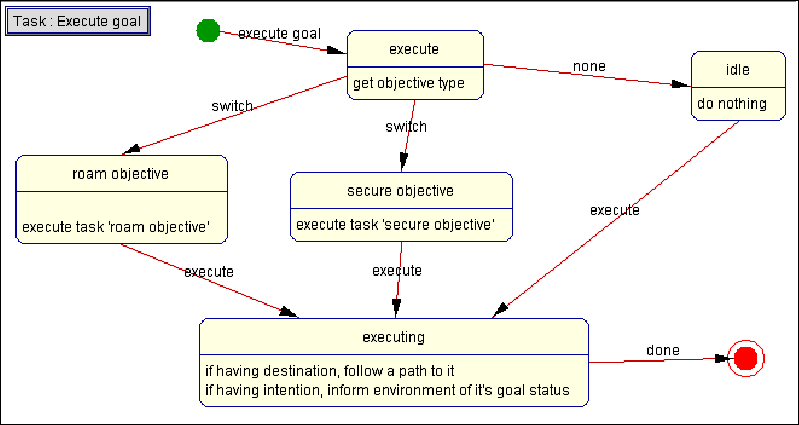

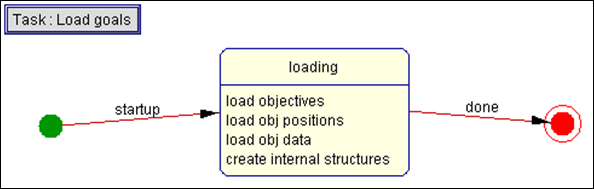

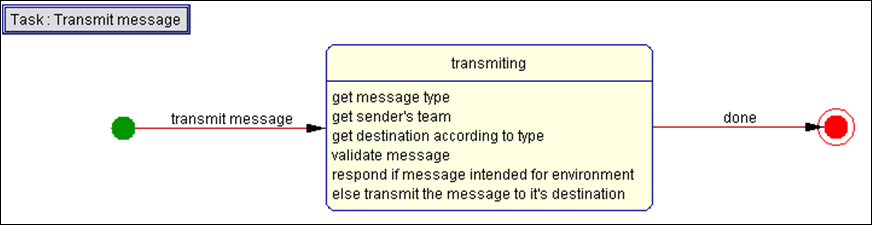

d. Task diagrams / panels

In this section we describe the task diagrams. Every time some agent chooses a goal, moves itself or an entity, it informs the environment (or the environment 'pools' the entities for this), and this communication is done through messages. Also, when a goal is complete the environment recreates it or an opposite one in the other team.

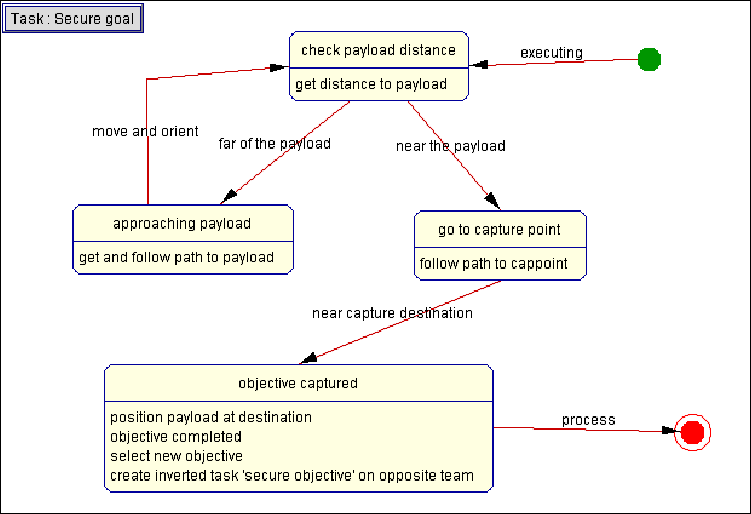

The "secure objective" task is executed whenever an agent takes an objective (entity). After the agent gets the objective, it computes a path to the capture point and follows it, so that when it arrives to the capture position the corresponding goal it's completed.

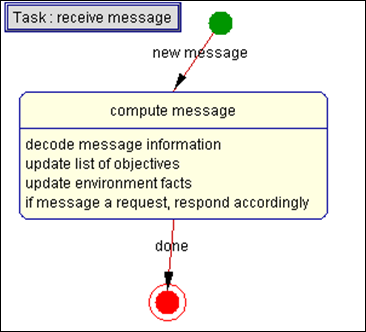

The "receive message" task basically decodes an incoming message and responds to it if required. According to the message type, the agent's internal environment representation is updated, like the list of known goals, the facts about the environment, position of entities, etc.

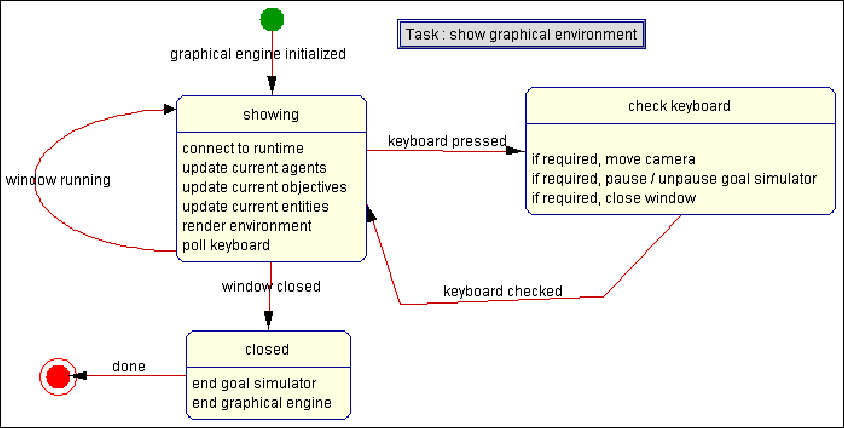

The task "show environment" shows on a window (or applet) the status of the environment, the entity's position, the agent's status, the goals and their status and more details. It also polls the keyboard, moves the camera, pauses or un-pauses the AI engine and quits the application if requested to.

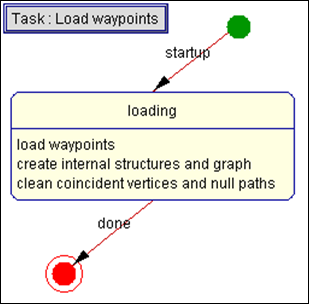

The task 'load waypoints' simply loads the waypoints description from a file (currently supports Wavefront obj, a 3D representation of a mesh) and creates a data structure representing the waypoints and allowed paths (connections).

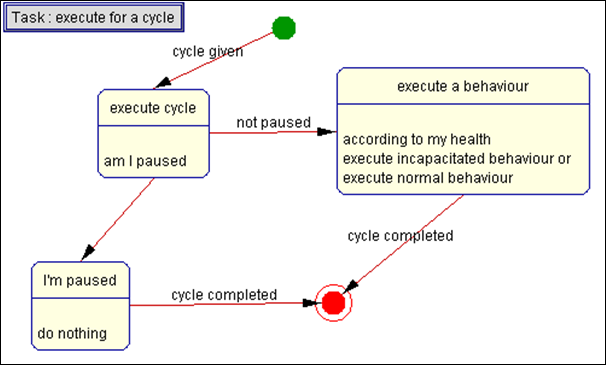

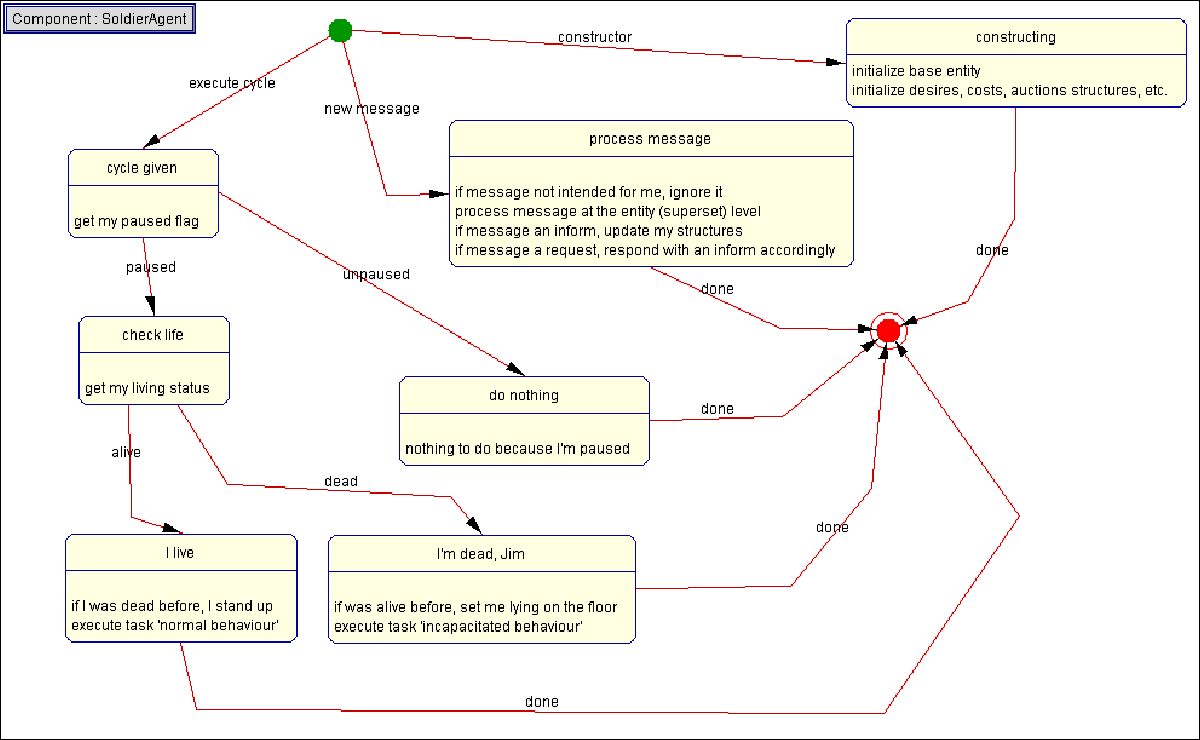

The task 'execute cycle' is called whenever an agent is given a cycle to execute in the machine (i.e., environment). According to the agent's pause flag, it may execute a behaviour (depending on it's health) or do nothing, because it's paused.

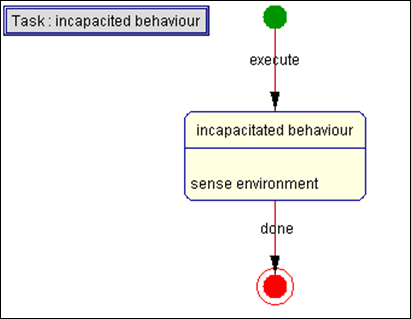

The task 'incapacitated behaviour' implements the sequence executed by the agent when its health level is to low to have a normal behaviour (i.e., when he's dead). In this case the agent only senses the environment.

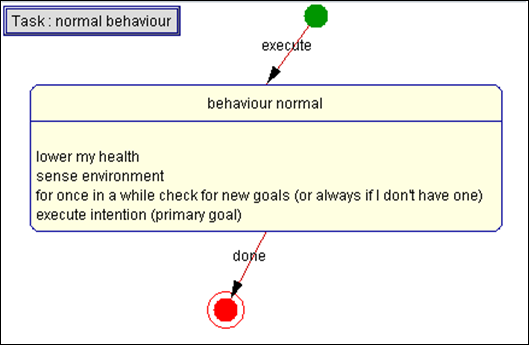

The task 'normal behaviour' executes the normal behaviour of the agent, i.e., when its level is above zero (alive). In this case the agent's health is slowly decreased (simulating his organism's tiredness), the agent senses its environment, scans for new goals (or sometimes if it already has one, to lower the overhead done by scanning) and executes it's primary intention.

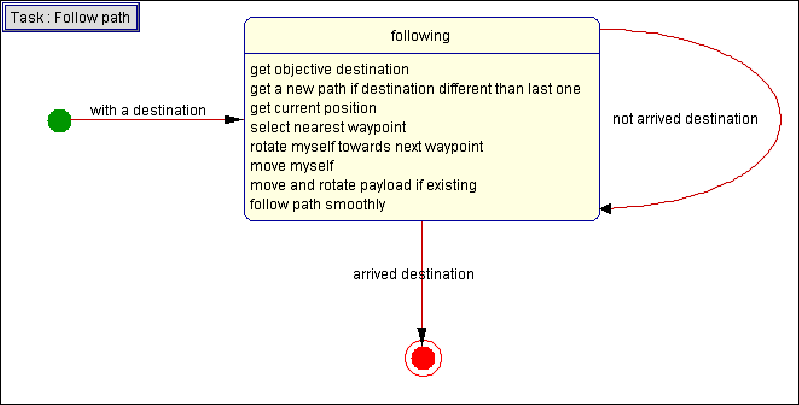

The "follow path" task controls the 'motor' system of each agent, making sure it follows one path from its current position to the goal's destination, doing it so smoothly. This task is also expected to follow the best and shortest path. According to the goal's destination, the agent gets a path and follows it's waypoints until it reaches the path's end. Also, if the agent has a payload it carries it with him.

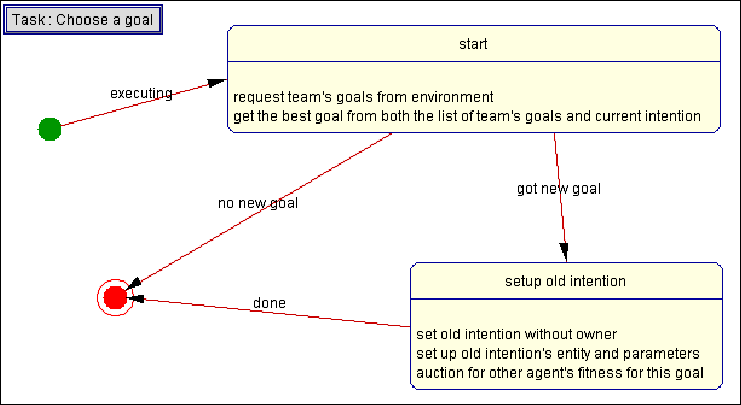

The task "choose an objective" gets and discusses the goals between all the team's agents. It also 'cleans' old intention (if the agent got a new one) so that it can be executed by another agent.

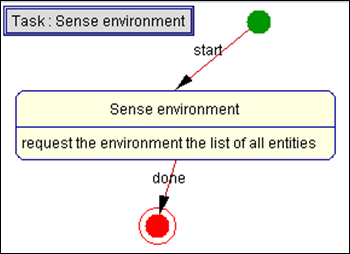

The "sense environment" is being constantly executed, even when the agent is incapacitated. It sends a message to the environment agent, asking facts about the neighbourhood. The environment then responds and the current agent decodes the message, updating its internal structures (not shown in the above diagram).

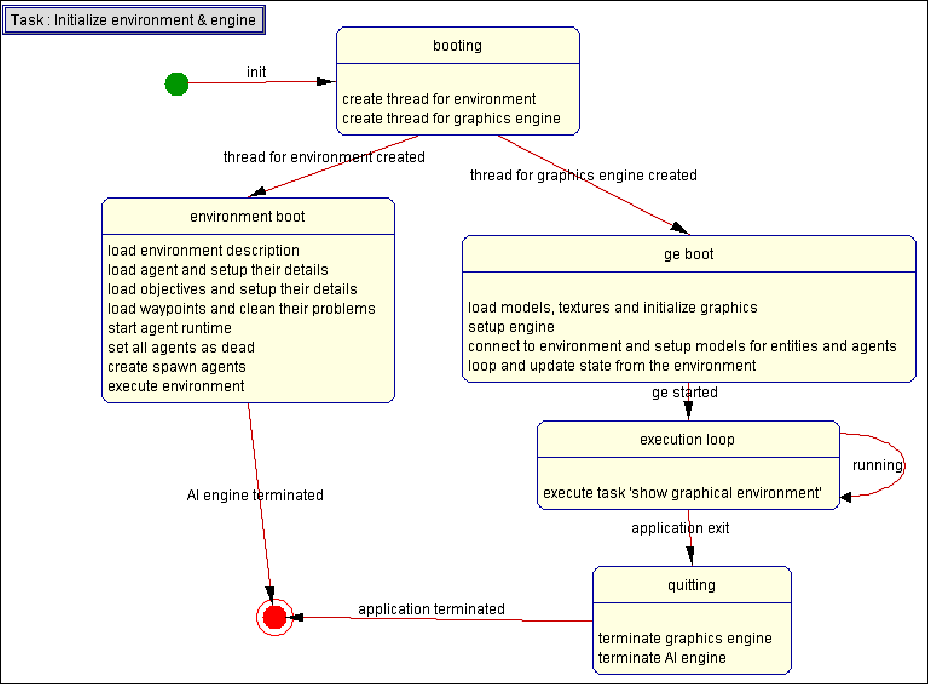

The "initialize environment" task corresponds basically to the system's boot. It loads all the necessarily information, initiates data structures, threads, agents, graphical engine and then starts the system's execution.

The task 'execute objective' gets a goal from the agent's list of goals, and according to its priority selects one and executes it.

The task 'load objectives' load the goal's information from a file and updates the system.

The task 'transmit message' is executed by the environment system, where it transmits the messages received from some agent to another one. If the message was intended to the environment, then it decodes it and responds accordingly. After the sending the message, the environment queues the message, where it is checked by the task "check message delivery", executing in parallel.

When an auction is created for a goal, the agents interact between them to choose the most suited one for the goal (basically the one nearer the objective). This is done on the task 'analyse objective' where the auctioneer creates a thread for this purpose (so it can do it's behaviour in parallel), the other team's agents bid their distance to the objective (goal's destination or payload) and in the end the initial agent compares the results to see who gets the goal. In this architecture, only the agents having no intention (or when their intention's priority is lower than the auction's goal) bid. If the winner of the auction is nearer the objective than the auctioneer, the objective is given to the winner (after some cleaning and setup so the last one can execute it).

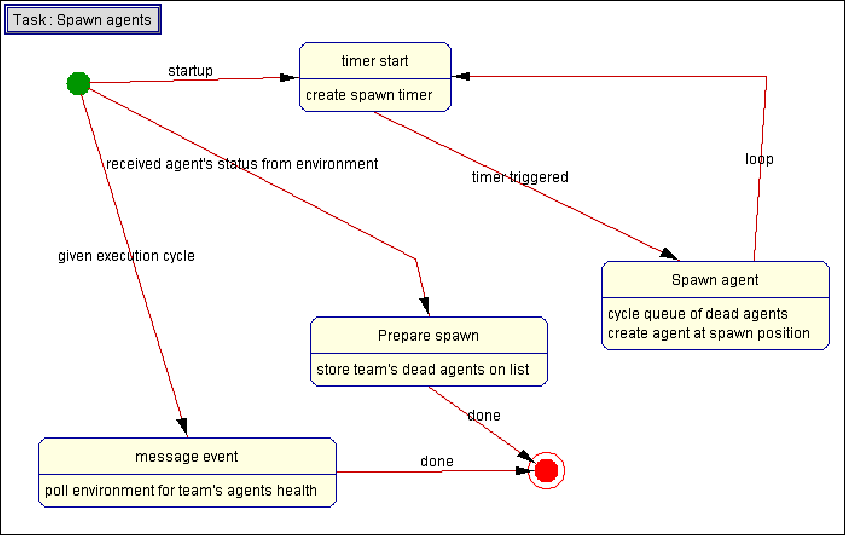

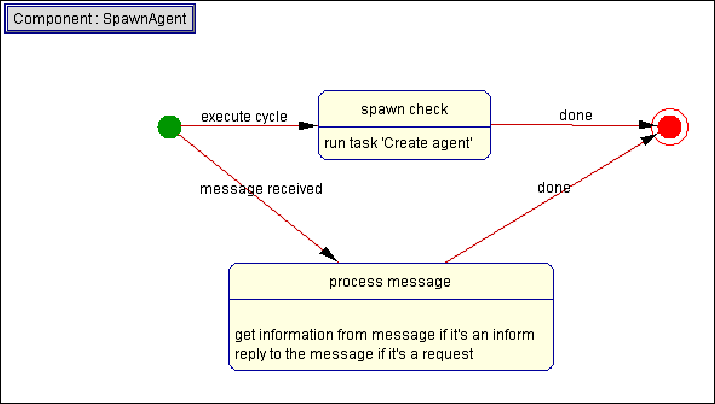

The diagram above corresponds to the task "spawn agents", "executed by the spawn agent to revive dead soldier agents of its team. When the spawn agent starts, it creates a timer for when it triggers, it revives the spawn's team dead agents. Every time the spawn agent is given a cycle to execute it gets the list of dead agents from the environment (actually it gets all agents, but filters the living ones) and stores this information internally. After that, if the timer has triggered, the spawn agent checks this list, repositions and heals the dead agents near its position.

e. Agent template diagram and conversations

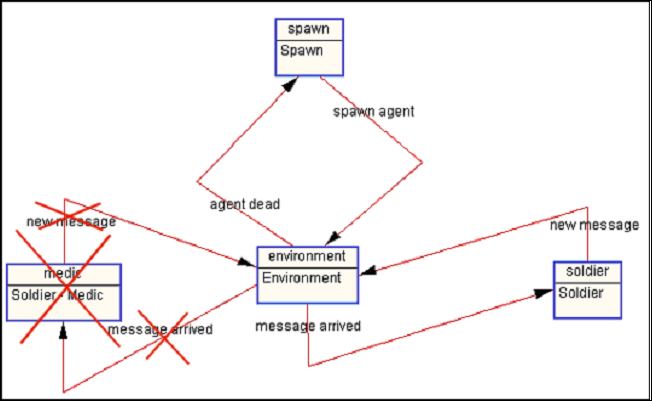

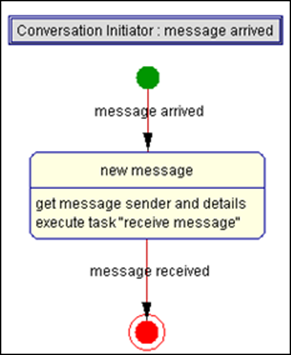

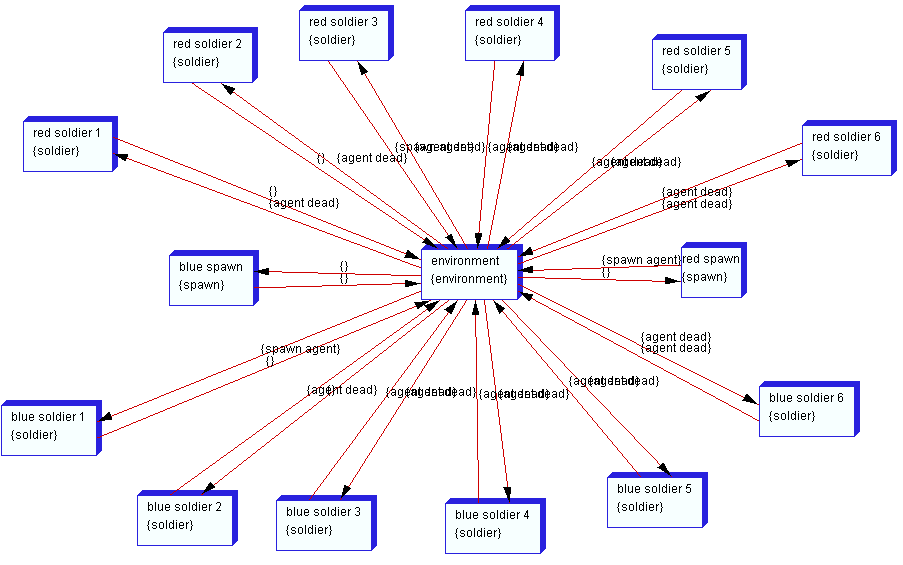

The diagram shown before shows the conversations between the system agents. For the spawn agent, the 'agent dead' actually comprises of scanning for it's team's dead agents, and the 'spawn agent' message corresponds to the repositioning of the dead agent, as well as it's revival. For the others, the conversations comprise on the transmission and receiving of messages (done through the environment).

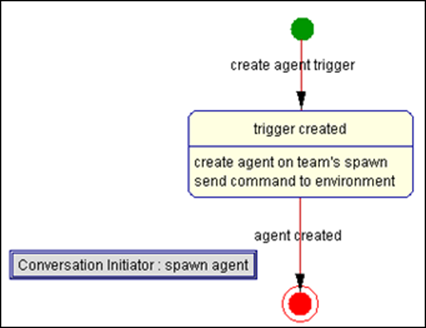

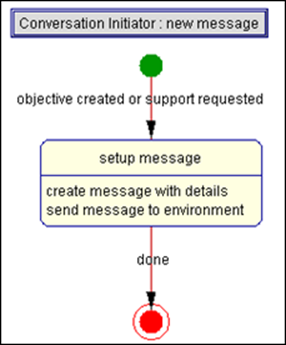

When an agent dies, and the environment is requested to inform the spawn agent of this, it informs the spawn agent of the agent's death. The conversation 'spawn agent' happens when the spawn agent decides that it is time to create a new soldier agent (after it's spawn timer triggered). It then transmits the event to the environment, where it will create and setup the new born agent. The 'new message' conversation occurs when some agent creates and sends a message to the environment (for the environment itself or for another agent). The 'message arrived' happens after the last one, when the environment has a message to deliver to some agent.

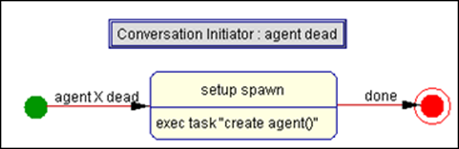

f. System classes architecture and Ontology

These next diagrams expose the final system classes and some enumerations, as well as the ontology, which are basically the same in our project. This happens because the content of the messages transmitted between the system's agents (concepts and facts) are done using these classes. The slots in the ontology correspond to the field variables in a class design. A simplified view of the classes is shown below:

The above classes are stored in the package CK.IA and these are detailed next:

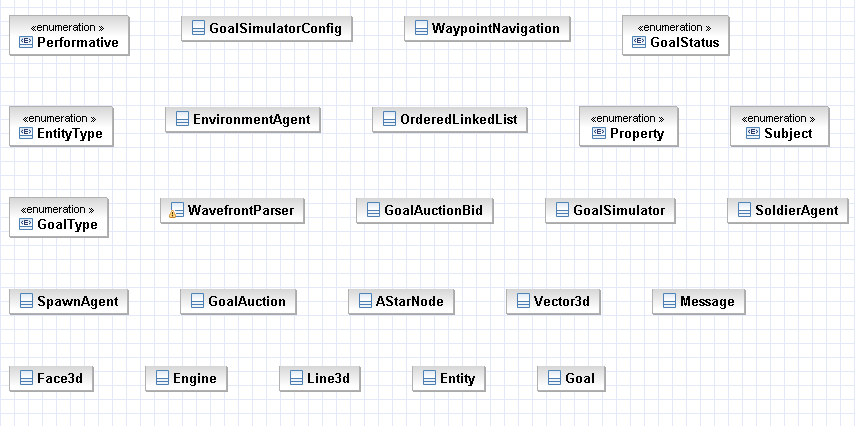

· First of all, there's a class holding all the configuration of the system, GoalSimulatorConfig. Any detail of the system, like spawn times, directories, health, positions, etc. are set up here.

· Next, there's the class Entity, superset of both SoldierAgent and SpawnAgent classes. It contains a counter which distinguishes all entities, its position, health, rotation angles and some other flags and some functions to handle the class fields and receival of messages. As all the agents (except the environment) have these properties, they 'extend' this base class. This class basically represents an entity with radius and a position in the environment.

· Related to the above described class, there's an enumeration holding information about all the types of entities. This enumeration is called EntityType and it's used to distinguish the specialization of the extended class. The current enumerations are ENTITY, SOLDIER_AGENT and SPAWN_AGENT.

· The SoldierAgent and SpawnAgents implement the actual autonomous entities in this virtual world. They execute their behaviours and communicate between themselves and their environment, as well as interacting with other entities for the purpose of achieving their goals. As discussed earlier, the soldier agent has as goals roaming (travelling) the world and carrying payloads from a source to a destination. The spawn agent checks for dead soldiers and respawns these near the spawn location.

· The EnvironmentAgent setups the goals, agents and transmits messages between them (being the actual transmission link). It also stores all the information about the environment, existing entities and goals. Whenever an agent asks a fact to the environment (like sensing its neighbourhood) the environment responds with a message holding the required information. Resuming, when an agent wants to know about facts, it asks the environment.

· The Engine class interacts with the environment and shows the user a very nice 3D representation of the virtual world. Additionally, it polls the keyboard, pauses the system and allows the user to end the 'simulation'.

· The WaypointNavigation and WavefrontParser loads, processes and generates paths from the waypoints, stored on Wavefront OBJ files, which can be easy edited in any decent 3D editor. The algorithm the system uses to generate paths is the A*, which has complexity O(n³) (cubic) and always gives the shortest path from a source position to a destination. These paths are requested by the soldier agents regarding their traversal of the environment.

· The Goal classes keeps information about goals, their priority, the owner, the assigned team, their type (currently ROAM and CARRY), the related entity (currently a payload, related to the CARRY goal), the owner, etc.

· The Message class holds and transmits the ontology concepts between all the agents. It has a unique identifier (statically increased with each new created message), a performative (request or inform), a subject (the class of its content or subject matter), some property regarding the subject (allowing more detailed parameters about the subject), the sender and the destination entities, etc.

· The Vector3d represents a tri-dimensional vector or position in space, stored in double precision.

· The AStarNode and OrderedLinkedList are used for easy implementation of the A* algorithm. The first one is used to store the heuristic and distance values and the second one imposes an order in these A* nodes.

· The Face3d and Line3d simply maintain a list of 3D vectors (vertices). They're used by both the path navigation system and Wavefront parser.

· The GoalAuction and GoalAuctionBid manage the goal auction system. The GoalAuction class is used by the auctioneer to communicate to the bidders there's a new goal auction going on. The bidders respond to the auctioneer with a message containing the GoalAuctionBid class, carrying their bid value and the related auction.

· Finally there are the enumeration classes, used by the messaging system to relate the transmitted and received messages to performatives, subjects and properties. Clearly, these are the enumerations Performative, Subject and Property.

After the above description, a more detailed class diagram is shown, excluding associations and dependencies, but with the inheritance relations:

g. Agents classes internal states and execution

The following diagrams represent the agents and their states throughout their 'life':

To simplify the internal agent states, some of the figures shown above recur to the role tasks diagrams documented before.

h. Architecture deployment

The following diagram exposes one and simple system deployment with two teams, each one with six soldier agents and one spawn agent:

The simple architecture deployment implements three soldiers, one medic and one spawn per faction. Obviously, only one environment exists. As said before, a programmer can alter the system's configuration to add more agents (but only one environment and one spawn per team), keeping in mind that the system will possibly run slower with many agents (and entities). The lines between the boxes (agents) represent the communication, in both ways between all the agents.

In this diagram the arrow's names are incorrect, caused by possible bugs in agent tool. According to the agent template diagram, the conversations from the soldier / medic agent to the environment are titled 'new message', and the ones on the opposite direction, from the environment to the agents are titled 'message arrived'.

First of all, download the program from this link:

For its execution, the application requires libraries for jinput, lwjgl and openal. In the above arquive we bundled all the native dynamic linked libraries of lwjogl, but we only tested the versions for windows, so for running on another OSes the user should search for documentation on how to do this. These are stored in the 'libraries' folder, as well as their java counterparts (jar).

And extract using your favourite decompressing software. The program was compressed using 7-ZIP which is free software. To run the application, on windows, the user can simply execute the batch file 'run GoalSimulator.bat'. This will launch the Java runtime, link it to the libraries and start the application. When the programs stops loading all the required files (models and textures) and initialized both the graphical engine and AI logic, the user is presented with 3D view of the environment, as shown next:

From this moment, the user may use the keyboard to follow the world (mouse view is not currently implemented). These are the available keys and their function:

· 'A' & 'D' - strafe camera left / right (on its Z axis)

· 'W' & 'S' - move camera forward / backward (on its X axis)

· 'Q' & 'E' - rotate camera's roll left / right

· 'R' - reset camera to both initial position and default orientation

· 'T' - set default camera orientation (looking down the X axis, up vector parallel to Y axis)

· 'C' - debug camera position and orientation (shown on the console)

· 'CTRL' & 'SPACEBAR' - move camera up / down (on its Y axis)

· 'F1' - toggle full screen / window mode

· '←' & '→' - rotate camera's yaw left / right

· '↑' & '↑←→↓' - rotate camera's pitch up / down

· 'U' & 'P' - un-pause and pause simulator (AI engine)

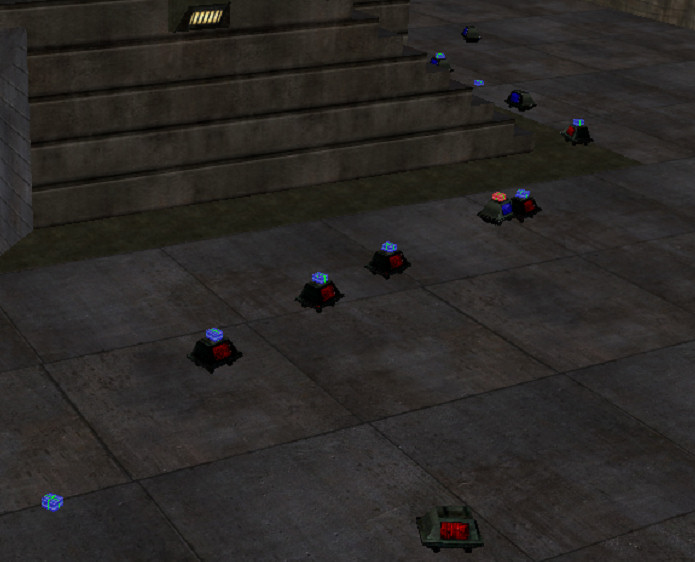

The following images show a little of the expected action going on the application:

The above image shows both team's agents carrying their payloads (actually, gifts) to their destination. There are also three agents not carrying gifts, but their goal is probably to approach some gift or arrive to a roam position.

In the last image we have some 'dead' agents, waiting for their revival by their team's spawn. When an agent carries a payload and dies, the payload is randomly dropped near the agent's body (shown on both nearest red agents).

To the moment several concepts and techniques were learned. MASE planning, project roles and task's study are, in our opinion, very important to the arrangement of the project. But in our highest opinion the used tool, 'agent tool' leaves also a lot to desire. Better interligation between the diagrams and reuse of the work done before could be improved. After using the software, we have a feeling that we did some irrelevant diagrams as well as some study.

We also learnt the concept of BDI agent, which relies heavily on beliefs and judges them to compute intents (basing on the agent's desires), and it is used on this project. Furthermore, we learnt how to use ontologies and to use them in the communication between the agents.

Finally another opinion is that MASE planning is basically the waterfall model (Software engineering planning) applied to an agent's system development. This model has some problems, being the critical one the fact that it does not easily allow correction of previous steps. And for waterfall planning, any other software engineering tool like IBM Rational Software Architect, Sybase Power Designer or Eclipse can be used, but probably missing some 'Artificial Intelligence' concepts, while on the other end they give a much better user interface and functionality.

Additionally, we learnt programming practices regarding multi-agent systems optimization and how create and maintain a graphical engine for the system.

To the time, this project has required approximately 36 (phase 1) + 24 (phase 2) + 350 (phase 3) work hours, including initial study, research and development. The impressive 350 hours for the third phase where not only caused by the implementation of the actual artificial intelligence system, but also by the design and study of the 3D graphical engine, waypoints, environment map and models.

jPCT

Lightweight Java Game Library (LWJGL)

Omni-bot Wiki

http://www.omni-bot.com/wiki/index.php?title=Main_Page

Wolfenstein: Enemy Territory

http://en.wikipedia.org/wiki/Wolfenstein:_Enemy_Territory

Omni-bot Official Website

Gamasutra - Handling Complexity in the Halo 2 AI

http://www.gamasutra.com/gdc2005/features/20050311/isla_01.shtml

Waterfall Paradigm

http://vva.dmso.mil/Special_topics/Paradigm/waterfall.htm

http://en.wikipedia.org/wiki/Waterfall_model

MASE and agentTool

htp://macr.cis.ksu.edu/projects/agentTool/agentool.htm

http://macr.cis.ksu.edu/projects/mase.htm

http://people.cis.ksu.edu/~sdeloach/publications/Conference/agentTool%20ATAL%202000.pdf

http://people.cis.ksu.edu/~sdeloach/publications/Conference/mase-aose2000.pdf

http://people.cis.ksu.edu/~sdeloach/publications/Conference/MaSE-maics20001.pdf

http://www.cis.ksu.edu/~sdeloach/publications/Journal/MaSE%20-%20IJSEKE.pdf

http://www.pa.icar.cnr.it/cossentino/al3tf1/docs/mase4agentlink.pdf

Protégé

http://protege.stanford.edu/doc/tutorial/get_started/table_of_content.html

http://protege.stanford.edu/doc/users_guide/index.html

Belief-Desire-Intention Agent Model

http://en.wikipedia.org/wiki/BDI_software_agent

http://en.wikipedia.org/wiki/Belief-Desire-Intention_model

http://www.cs.huji.ac.il/~imas/readings/atal98b.pdf

Agent Oriented Software Engineering with INGENIAS

http://sirius.lcc.uma.es/iberagents/gomezsanz.pdf

Search algorithms

http://www.algorithmist.com/index.php/Floyd-Warshall's_Algorithm

http://www.cs.ucf.edu/~reinhard/classes/cop3503-fall03/floyd.pdf

http://www.algorithmist.com/index.php/Dijkstra's_algorithm

http://olympiad.cs.uct.ac.za/presentations/camp1_2008/search_algos.pdf

http://csci.biola.edu/csci480spring03/FloydWarshall.pdf

http://en.wikipedia.org/wiki/A*_search_algorithm

http://theory.stanford.edu/~amitp/GameProgramming/

Wavefront file format

http://www.martinreddy.net/gfx/3d/OBJ.spec

http://en.wikipedia.org/wiki/Obj

http://www.eg-models.de/formats/Format_Obj.html

http://www.robthebloke.org/source/obj.html